Description of the project:

Cataloging products from different kinds of data (text and images) is important for e-commerce because it supports applications such as product recommendation and personalized search. The task is to predict each product's type code from its text data (product designation and description) and its image data (product image).

Resources to refer to:

- Data:

- This project is part of the Rakuten France Multimodal Product Data Classification challenge. The data and their description are available at: https://challengedata.ens.fr/challenges/35

- Text data: ~60 MB

- Image data: ~2.2 GB

- About 99k product listings, with over 1000 classes.

Project Report: Machine Learning Pipeline for Text and Image Data

1. Setup and Configuration

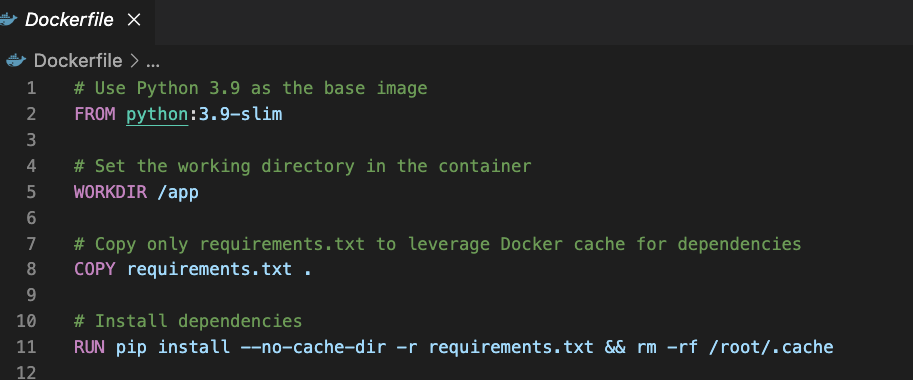

The project begins by setting up essential paths and directories depending on the environment (local or Google Colab). Paths are defined for storing raw and processed datasets, including text data, image data, preprocessed features, model files, and result outputs.
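The path setup described above can be sketched as follows. This is a minimal illustration, not the project's actual configuration: the directory names, the Drive location, and the helper names `in_colab` and `build_paths` are all assumptions.

```python
import sys
from pathlib import Path


def in_colab() -> bool:
    """Return True when the google.colab module is loaded (normally only on Colab)."""
    return "google.colab" in sys.modules


def build_paths(base_dir=None) -> dict:
    """Create the project directories and return them keyed by purpose."""
    if base_dir is None:
        # Assumed locations: a mounted Drive folder on Colab, ./data locally.
        base_dir = "/content/drive/MyDrive/rakuten" if in_colab() else "data"
    base = Path(base_dir)
    paths = {
        "raw_text": base / "raw" / "text",
        "raw_images": base / "raw" / "images",
        "features": base / "processed" / "features",
        "models": base / "models",
        "results": base / "results",
    }
    for directory in paths.values():
        directory.mkdir(parents=True, exist_ok=True)
    return paths
```

Calling `build_paths()` once at the top of a notebook keeps every later step independent of where the code runs.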

2. Data Preprocessing

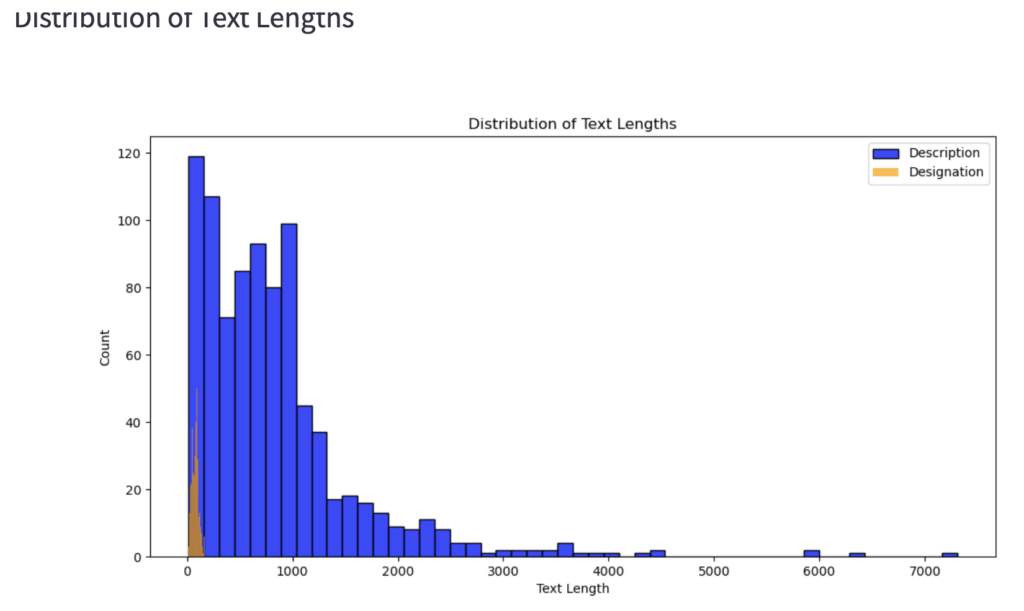

- Text Preprocessing: Product designations and descriptions are transformed into numerical vectors using two pre-trained French language models, CamemBERT and FlauBERT. The text is tokenized and encoded by these models, and the resulting vectors are saved as NumPy arrays for faster access during model training. This step captures linguistic nuances in the text to improve the model’s understanding of product descriptions.

- Image Preprocessing: Images are preprocessed in batches to manage memory efficiently. This includes resizing, normalizing pixel values to a standard range, and converting them into arrays. These normalized arrays are saved as `.npy` files to allow quick retrieval during the model training phase.
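The batched image step can be illustrated with a NumPy-only sketch. Several details are assumptions rather than the project's settings: the 64x64 target size, the [0, 1] pixel range, the batch size, the output file naming, and the nearest-neighbour resize, which stands in for whatever image library the project actually uses.

```python
import numpy as np


def resize_nearest(image: np.ndarray, size: tuple) -> np.ndarray:
    """Resize an H x W x C image with nearest-neighbour sampling."""
    height, width = image.shape[:2]
    rows = np.arange(size[0]) * height // size[0]
    cols = np.arange(size[1]) * width // size[1]
    return image[rows][:, cols]


def preprocess_batch(images, size=(64, 64)) -> np.ndarray:
    """Resize each uint8 image and scale pixel values to [0, 1] as float32."""
    resized = [resize_nearest(img, size) for img in images]
    return np.stack(resized).astype(np.float32) / 255.0


def preprocess_in_batches(images, out_prefix, batch_size=256, size=(64, 64)):
    """Process images batch by batch, saving one .npy file per batch."""
    saved = []
    for start in range(0, len(images), batch_size):
        batch = preprocess_batch(images[start:start + batch_size], size)
        path = f"{out_prefix}_{start // batch_size:04d}.npy"
        np.save(path, batch)
        saved.append(path)
    return saved
```

Only one batch is held as a float array at a time, which is the point of batching here: the float32 copy of an image is four times larger than its uint8 original.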

3. Dataset Management

- Data Limitation: To avoid memory overload and manage processing times, the dataset size is restricted to a set maximum. This keeps training manageable and scalable.

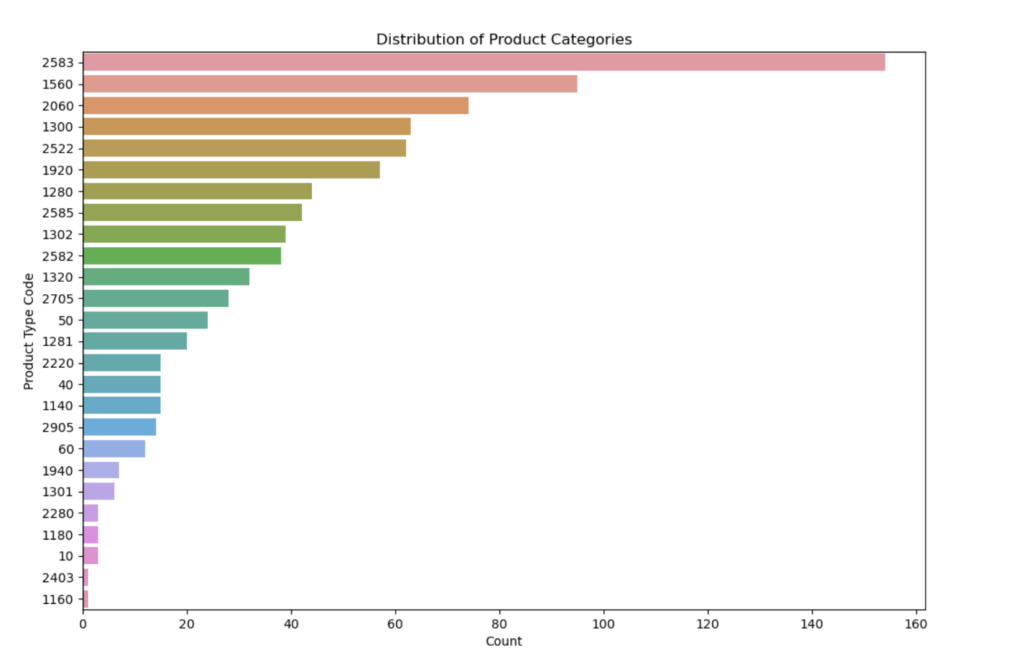

- Class Balancing: A class balancing technique is employed to handle imbalances in the dataset. Classes with fewer samples are oversampled to ensure that the model doesn’t favor more frequent classes. This helps in maintaining equitable performance across all classes.
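As a rough sketch of the two dataset-management steps, the functions below cap the dataset size and then oversample minority classes. The source does not say how oversampling is done, so random duplication up to the size of the largest class is an assumption, as are the function names and the seed parameter.

```python
import random
from collections import defaultdict


def limit_dataset(samples, labels, max_samples, seed=0):
    """Keep at most max_samples randomly chosen (sample, label) pairs."""
    indices = list(range(len(samples)))
    if len(indices) > max_samples:
        indices = sorted(random.Random(seed).sample(indices, max_samples))
    return [samples[i] for i in indices], [labels[i] for i in indices]


def oversample(samples, labels, seed=0):
    """Duplicate minority-class items at random until every class matches the largest."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)
    target = max(len(indices) for indices in by_class.values())
    balanced = []
    for indices in by_class.values():
        extra = [rng.choice(indices) for _ in range(target - len(indices))]
        balanced.extend(indices + extra)
    rng.shuffle(balanced)
    return [samples[i] for i in balanced], [labels[i] for i in balanced]
```

Because both functions work on indices, the same selection can be applied to text vectors and image arrays so the two modalities stay aligned.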

4. Training and Evaluation

- Data Splitting: The balanced dataset is split into three sets: training, validation, and testing. The model learns from the training set, is tuned against the validation set, and is finally scored on the test set, so overfitting shows up as a gap between training and held-out performance instead of going unnoticed.

- Model Building: A multi-input neural network is defined using TensorFlow. The model is structured to accept both text and image inputs, leveraging the preprocessed vectors and images. This design lets a single classifier combine visual and textual features when predicting the product type.

- Training: The training process employs callbacks such as early stopping and learning rate reduction, which help to dynamically adjust the training based on performance. Metrics like accuracy and AUC (Area Under the Curve) are used to assess the model’s effectiveness.

- Cross-Validation: K-Fold cross-validation is implemented to further evaluate the model’s performance by training it on different subsets of data. Averaging results across folds gives a more reliable performance estimate than a single split.
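The splitting and cross-validation steps can be sketched with index lists alone. The 70/15/15 proportions, the five folds, and the function names are illustrative assumptions; the source does not state the values used.

```python
import random


def three_way_split(n_samples, val_fraction=0.15, test_fraction=0.15, seed=0):
    """Shuffle indices once and cut them into train, validation and test lists."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    n_test = round(n_samples * test_fraction)
    n_val = round(n_samples * val_fraction)
    test = indices[:n_test]
    val = indices[n_test:n_test + n_val]
    train = indices[n_test + n_val:]
    return train, val, test


def k_fold_indices(indices, k=5):
    """Yield (train, validation) index lists; each index is held out exactly once."""
    folds = [indices[i::k] for i in range(k)]
    for i, held_out in enumerate(folds):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, held_out
```

Running `k_fold_indices` on the training indices only leaves the test set untouched until the final evaluation. One design point worth keeping in mind: when oversampling happens before the split, copies of the same sample can land on both sides of it, so oversampling only the training portion is the more cautious order.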

5. Visualizations

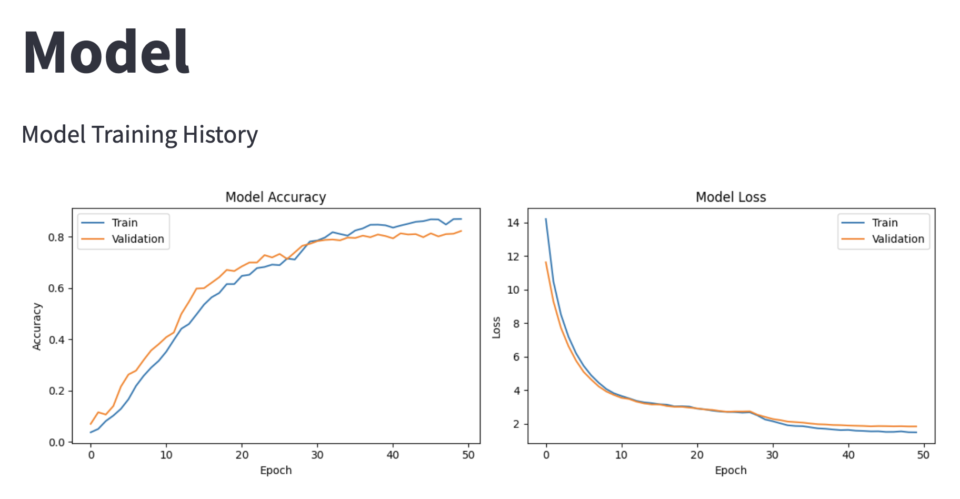

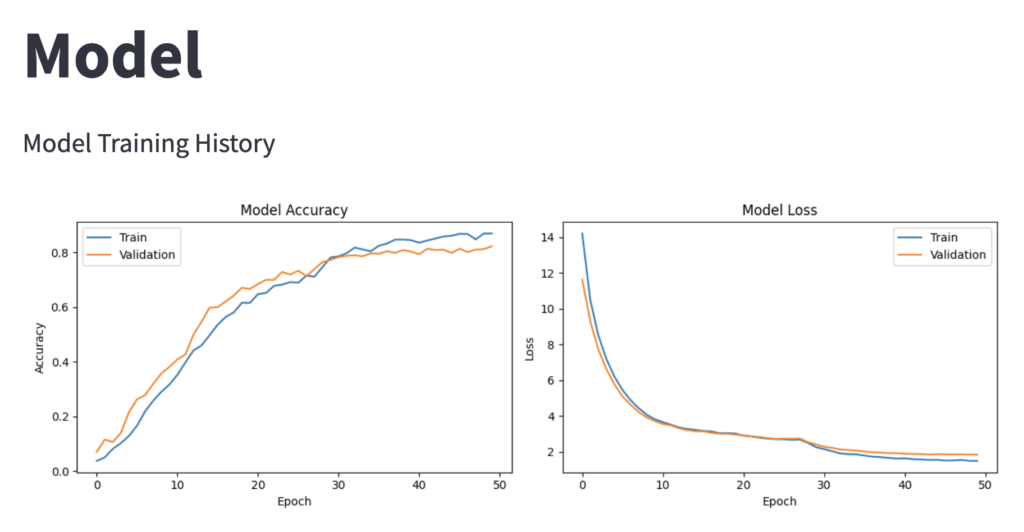

- Training History Plot: Accuracy and loss over the training epochs are plotted to visualize model performance and saved for reference. These plots help in understanding model convergence and identifying potential overfitting or underfitting.

- Class Distribution Plot: Plots of class distributions are generated before and after class balancing to verify the effectiveness of the rebalancing strategy.

- Image Sample Visualization: Preprocessed image samples are displayed and saved, enabling a qualitative assessment of the preprocessing pipeline.

6. Results and Model Saving

- The trained model and its associated metrics are saved for future use. This includes the model architecture, weights, and evaluation results. Storing the model allows easy reuse without retraining.

- Processed data and visualizations are also saved, providing a comprehensive view of the pipeline’s outcomes. These saved results serve as documentation for the project’s end-to-end workflow.
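Saving the evaluation results might look like the sketch below, which writes metrics to a JSON file. The file name and the `save_metrics` helper are hypothetical, and the model itself would be saved separately through TensorFlow's own saving mechanism.

```python
import json
from pathlib import Path


def save_metrics(metrics: dict, results_dir, name="metrics.json") -> Path:
    """Write evaluation metrics to a JSON file and return its path."""
    out_dir = Path(results_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    out_path = out_dir / name
    # float() converts NumPy scalars, which json cannot serialize directly.
    serializable = {key: float(value) for key, value in metrics.items()}
    out_path.write_text(json.dumps(serializable, indent=2))
    return out_path
```

Keeping metrics in a plain-text format next to the saved model makes it easy to compare runs without reloading anything.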

7. Execution and Example Workflow

An example workflow is demonstrated to preprocess the data, balance the classes, split the dataset, and train the model. This section verifies data integrity, checks consistency between preprocessed features, and ensures that the pipeline works as intended.
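A consistency check between preprocessed features could be as simple as the sketch below, which confirms that text vectors, image arrays, and labels all have the same number of rows. The helper name `check_alignment` and the choice to raise `ValueError` are assumptions.

```python
def check_alignment(**arrays):
    """Raise ValueError unless every named array has the same number of rows."""
    lengths = {name: len(values) for name, values in arrays.items()}
    if len(set(lengths.values())) > 1:
        raise ValueError(f"Row counts differ: {lengths}")
    # Return the shared row count (0 when no arrays were passed).
    return next(iter(lengths.values()), 0)
```

For example, `check_alignment(text=text_vectors, images=image_arrays, labels=labels)` would fail fast if one modality lost rows during preprocessing, before any training time is spent.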

Alex is an experienced SEO consultant with over 14 years of working with global brands like Montblanc, Ricoh, Rogue, Gropius Bau and Spartoo. With a focus on data-driven strategies, Alex helps businesses grow their online presence and optimise SEO efforts.

After working in-house as Head of SEO at Spreadshirt, he now works independently, supporting clients globally with a focus on digital transformation through SEO.

He holds an MBA and has completed a Data Science certification, bringing strong analytical skills to SEO. With experience in web development and Scrum methodologies, he excels at collaborating with cross-functional teams to implement scalable digital strategies.

Outside work, he loves sport: running, tennis and swimming in particular!